
Rethinking Research Assessment: Addressing Institutional Biases in Review, Promotion, and Tenure Decision-Making

Authors: Ruth Schmidt is an Associate Professor at IIT’s Institute of Design, and Anna Hatch is the program director for DORA. PLOS supports DORA financially via organizational membership, and PLOS is represented on the steering committee of DORA. This post is part one of a four-part series.

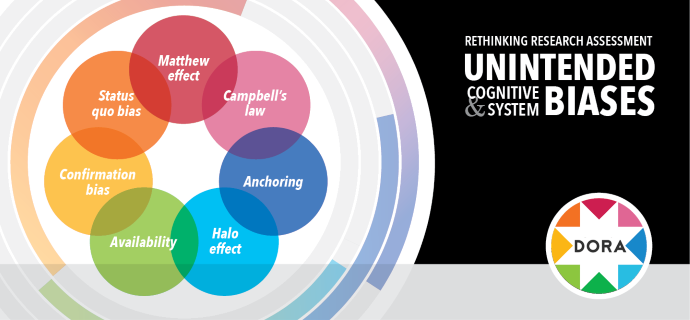

The field of behavioral economics emerged in the 1970s at the confluence of psychology and the study of human judgment and decision-making, grounded in the realization that humans don’t always act “rationally” or in their own best interests. Real-world applications of behavioral economics often focus on consumers and citizens, such as increasing the percentage of adults who receive influenza vaccinations or helping people save for retirement. However, the same decision-making biases that we wrestle with in our personal lives also show up in professional settings. In fact, misplaced assumptions about our own rationality and expertise in academia may make us more prone to cognitive biases than we think. So when we make decisions on behalf of others in hiring, review, promotion, and tenure, it is even more important to guard against mental shortcuts and habits of thought.

Although recognizing personal biases is important, bias training that teaches individuals to become more aware of their decision-making tendencies has been shown to have limited impact on actual behavior. Instead, creating new structural and institutional conditions (in the parlance of behavioral economists, new “choice architectures” that scaffold decision-making processes) is often more effective at reducing bias and supporting more equitable practices.

Although the list is not comprehensive, we’ve identified four major barriers to equitable decision-making in hiring, review, promotion, and tenure processes that commonly result from biased thinking. Over the next four weeks, we will describe each barrier, the specific cognitive biases that feed it, and recommendations for overcoming it at an institutional level. Part one examines “objective” comparisons.

1. “Objective” comparisons are not necessarily equitable.

Qualitative judgments are notoriously difficult to make, in part because their “apples and oranges” nature makes it hard to say that any single option is truly better. This can lead to well-meaning efforts to reduce complexity, such as using proxies to simplify comparisons. Qualities that can be easily measured or ranked are appealing because they feel less subjective, but the temptation to rely on quantifiable indicators to signal quality has significant implications for decision-making.

Beyond oversimplifying options to make them more comparable, the urge to measure things can imbue measurability itself with outsized importance. But as Campbell’s Law has long made clear, once indicators are accepted as a way to gauge value, they start to lose meaning as objective measures. Instead, they increase the temptation to focus on a narrow set of activities and to reduce investment in reviewing other meaningful but less rewarded achievements. Publishing research in venues with high Journal Impact Factors (JIFs) is a classic example: because citations and “high-quality” publications are perceived as so important in promotion and tenure dossiers, they shape researchers’ decisions about what research to conduct, submit, and publish, and can lead evaluators to prioritize research with a higher likelihood of appearing in the “right” journals over other forms of impact.

A second form of objectivity bias is the Matthew effect, which describes the tendency for resources to flow to those who already have them. This can occur when a lack of time or motivation to vet results leads decision-makers to default to preconceived ideas about what seems good, such as when researchers with long track records of grants receive a disproportionate share of new funding. Another example of the Matthew effect is when highly cited references become even more cited in part because researchers see that they’re highly cited, rather than because they are more relevant or of higher quality. This tendency is likely to grow as we rely more on automation to customize or deliver content, as in social media, where popular items are promoted more heavily in newsfeeds, or on Amazon, where recommended items attract more hits.

Finally, anchoring, a cornerstone of early behavioral economics research in which the first piece of data we see or hear defines the reference point against which subsequent data are compared, can create a false sense of objectivity and inadvertently emphasize relative comparisons between options rather than their actual values. For example, receiving a $2 million grant one year may anchor one’s expectations for the value of future grants, causing a $1.2 million grant to feel comparatively disappointing. But anchoring can also manifest in a more general, qualitative context, such as when people use their own lives as an “anchor” to judge others’ experiences.

What can institutions do?

Contextualize data. Relying solely on quantifiable measures can contribute to a false sense of objectivity. Structured interview and review protocols can reduce inequities in review, promotion, and tenure (RPT) decision-making. Balancing quantitative metrics with qualitative inputs, such as narrative CV formats that capture less tangible qualities, can provide a counterweight by putting data points in context.

Diversify standards. The temptation to anchor on old assumptions about what good researcher performance looks like often arises out of habit. To counter the urge to rely on a narrow or anecdotal set of data that emphasizes legacy notions of importance, institutions and decision-makers can elicit and select standards from a wider range of sources, based on a more holistic set of values.

Balance quantitative and qualitative measures. Our tendency to bend our behavior to meet quantifiable targets is stronger than we think. Institutions should actively identify where setting specific, quantifiable goals may reinforce some behaviors at the expense of others. They should also make sure that what is being measured aligns with organizational or disciplinary goals and values, like achieving real-world impact, rather than with what is easiest to measure, such as university rankings.

Next Monday we’ll tackle how individual data points can accidentally distract from the whole.